(CeltStudio/Shutterstock)

The smoke is still clearing from OpenAI’s big GPT-5 launch today, but the verdict is starting to come in on the company’s other big announcement this week: the launch of two new open weight models, gpt-oss-120b and gpt-oss-20b. OpenAI’s partners, including Databricks, Microsoft, and AWS, are lauding the company’s return to openness after six years of developing only proprietary models.

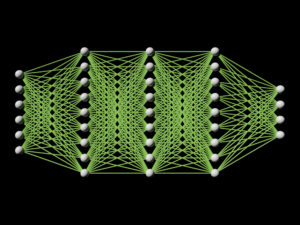

OpenAI’s two new language models, gpt-oss-120b and gpt-oss-20b, feature roughly 120 billion parameters and 20 billion parameters, respectively, which makes them relatively small compared to the biggest trillion-parameter models currently on the market. Both gpt-oss models are reasoning models that utilize a “mixture of experts” architecture. The larger model can run on a standard datacenter-class GPU, while the smaller one can run on a desktop computer with just 16GB of memory.

OpenAI says the bigger model achieves “near-parity” with its o4-mini model on core reasoning benchmarks, while running efficiently on a single 80 GB GPU. “The gpt-oss-20b model delivers similar results to OpenAI o3‑mini on common benchmarks and can run on edge devices with just 16 GB of memory, making it ideal for on-device use cases, local inference, or rapid iteration without costly infrastructure,” the company says in its blog post announcing the models.

According to OpenAI launch partner Cloudflare, OpenAI has packed a lot of capability into relatively small packages. “Interestingly, these models run natively at an FP4 quantization, which means that they have a smaller GPU memory footprint than a 120 billion parameter model at FP16,” the company writes in its blog. “Given the quantization and the MoE architecture, the new models are able to run faster and more efficiently than more traditional dense models of that size.”
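To make the memory claim concrete, here is a rough back-of-the-envelope sketch in Python. It assumes the round parameter counts quoted above and counts only the bytes needed to store the weights; it ignores activations, the KV cache, quantization scale factors, and any layers kept at higher precision, so real footprints will differ. The helper name `weight_memory_gb` is made up for illustration.

```python
def weight_memory_gb(num_params: float, bits_per_param: float) -> float:
    """Approximate storage for the weights alone, in GB (10^9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


# Round parameter counts taken from the article; actual counts differ slightly.
models = [("gpt-oss-120b", 120e9), ("gpt-oss-20b", 20e9)]

for name, params in models:
    fp16_gb = weight_memory_gb(params, 16)  # 2 bytes per parameter
    fp4_gb = weight_memory_gb(params, 4)    # half a byte per parameter
    print(f"{name}: FP16 ~{fp16_gb:.0f} GB, 4-bit ~{fp4_gb:.0f} GB")
```

Under these simplifying assumptions, the larger model drops from about 240 GB at FP16 to about 60 GB at 4 bits, and the smaller one from about 40 GB to about 10 GB, which is consistent with the 80 GB GPU and 16 GB device figures OpenAI cites.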

The two models feature a 128K context window and provide adjustable reasoning levels (low/medium/high). They’re English-only and work only on text, as opposed to being multimodal like some other open weight models, such as Meta’s Llama. However, because they are open weight models distributed under an Apache 2.0 license, customers will be able to adopt them and run them wherever they want. Plus, customers will be able to fine-tune the models to provide better performance on their own data.

Databricks is a launch partner with OpenAI for gpt-oss-120b and gpt-oss-20b, which are already available in the company’s AI market. Hanlin Tang, Databricks’ CTO of Neural Networks, applauded the launch of the two new models.


“We’ve embraced open source and open models for a very long time, from Meta’s Llama models to some of our own models in the past, and it’s great to see OpenAI kind of joining the open model world,” Tang said. “With open AI models, you get a lot more transparency into how the model operates. And importantly, you can heavily customize it because you have access to all of the weights.”

Tang is excited for Databricks’ customers to start playing around with the gpt-oss-120b and gpt-oss-20b models, which OpenAI’s benchmarks indicate are some of the most powerful open weight models available, he told BigDATAwire.

“We’re still testing. It’s still early days. Some of these models take a week or two to really breathe and flesh out to know exactly where their performance is, what they’re good at, what they’re bad at,” Tang said. “But the early signs are pretty promising.”

As mixture of experts (MoE) models, the new models should be really good for low latency use cases, such as agentic applications, chatbots, and co-pilots, Tang said. Those are very popular types of AI applications at the moment, he said, with the third most popular type being batch-style text summarization of PDF documents and unstructured data.
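The latency argument for MoE comes down to each token passing through only a few experts instead of the whole network. The toy Python sketch below illustrates generic top-k routing; it is not OpenAI’s implementation. The four experts, the router scores, and the function names `top_k_route` and `moe_forward` are all hypothetical, and the experts are simple scalar functions standing in for feed-forward blocks.

```python
import math


def top_k_route(router_logits, k):
    """Pick the k highest-scoring experts and softmax-normalize their scores."""
    ranked = sorted(range(len(router_logits)),
                    key=lambda i: router_logits[i], reverse=True)
    chosen = ranked[:k]
    peak = max(router_logits[i] for i in chosen)  # subtract max for stability
    exps = [math.exp(router_logits[i] - peak) for i in chosen]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(chosen, exps)]


def moe_forward(x, experts, router_logits, k=2):
    """Run only the selected experts and mix their outputs by router weight."""
    routes = top_k_route(router_logits, k)
    output = sum(weight * experts[i](x) for i, weight in routes)
    return output, [i for i, _ in routes]


# Hypothetical toy setup: four "experts" that just scale their input.
experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]
output, used = moe_forward(10.0, experts, router_logits=[0.1, 2.0, -1.0, 1.5], k=2)
print(f"experts used: {used} of {len(experts)}, output: {output:.2f}")
```

In this toy run only two of the four experts do any work for the input, which is the property that lets a sparse model spend less compute per token than a dense model with the same total parameter count.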

While the new open weight models are text-only (so they don’t support reading PDFs), Tang expects them to excel at batch workloads too. As for the co-pilot use case, which perhaps has the tightest latency requirements, Tang said, “We still need to play around a little bit more to just understand just how good it is at coding.”

Microsoft is also a backer of OpenAI’s newfound appreciation for open weight models. “Open models have moved from the margins to the mainstream,” Microsoft wrote in a blog post. “With open weights teams can fine-tune using parameter-efficient methods (LoRA, QLoRA, PEFT), splice in proprietary data, and ship new checkpoints in hours–not weeks.”
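To give a sense of why parameter-efficient methods such as LoRA are cheap, the Python sketch below counts trainable parameters for a single weight matrix. LoRA freezes the original matrix and trains two small low-rank factors in its place. The 4096 x 4096 layer size and rank of 8 are hypothetical values chosen for illustration, not figures from the gpt-oss models, and `lora_trainable_params` is a made-up helper name.

```python
def lora_trainable_params(d_out: int, d_in: int, rank: int) -> int:
    """LoRA freezes W (d_out x d_in) and trains B (d_out x rank) and A (rank x d_in)."""
    return rank * (d_out + d_in)


# Hypothetical layer size and rank, for illustration only.
d_out = d_in = 4096
rank = 8

full = d_out * d_in                              # parameters updated by full fine-tuning
lora = lora_trainable_params(d_out, d_in, rank)  # parameters updated by LoRA
print(f"full fine-tuning: {full:,} params")
print(f"LoRA (rank {rank}): {lora:,} params ({lora / full:.2%} of full)")
```

For this hypothetical layer, LoRA trains 65,536 parameters instead of 16,777,216, well under 1% of the full matrix, which is why adapting an open weight model can be fast and comparatively inexpensive.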

Open weight models like gpt-oss can be fine-tuned for better performance on customer data (Evannovostro/Shutterstock)

Customers can also distill or quantize the gpt-oss models, Microsoft said, or trim context length. Customers can apply “structured sparsity to hit strict memory envelopes for edge GPUs and even high-end laptops,” the company said. Customers can also inject “domain adapters” using the open weight models and more easily pass security audits.

“In short, open models aren’t just feature-parity replacements–they’re programmable substrates,” the company said.

AWS is also backing OpenAI and its work with open weight models.

“Open weight models are an important area of innovation in the future development of generative AI technology, which is why we have invested in making AWS the best place to run them–including those launching today from OpenAI,” Atul Deo, AWS director of product, stated.

Most AI adopters are mixing and matching different AI models that are good at different things. The biggest large language models, such as GPT-5, are trained on huge amounts of data and are therefore quite good at generalizing. They tend to be expensive to use, however, and since they’re closed, they can’t be fine-tuned to work on customers’ data.

Smaller models, on the other hand, may not generalize as well as the bigger LLMs, but they can be fine-tuned (if they’re open), can run wherever customers want (which brings privacy benefits), and are generally more cost effective to run than big LLMs.

It’s all about finding and fitting particular AI models to the customers’ specific AI use case, Tang said.

“If super high quality really matters, they’re willing to pay a lot of money for a very high-scale proprietary model,” he said. “Is it one of the open weight models that fit right exactly where they need on quality and cost? Are they customizing it? So we see customers making a pretty broad set of choices and mixing both in a lot of what they’re building.”
